How to measure AI progress, and what this exponential growth means for how we should be using these tools.

For a long time, we assessed LLMs - AI models like GPT-4, GPT-5, Gemini 2.5, Gemini 3 - by their ability to pass exams. Math exams. Language exams. Coding tests. We'd grade them like students.

In the beginning, it was almost funny how bad they were. They'd score 47% or 63% on math exams. But very soon after, they started scoring 99%. Basically every model could solve even the hardest math exams almost perfectly, so we had to create more challenging tests.

We needed something harder than the hardest math exam just to tell the models apart. That was a problem for a long time: we kept updating the exams, and we still couldn't really tell which model was better.

All these exam benchmarks - scientific reasoning, physics proficiency - stopped making sense, because the models had outgrown the exams themselves.

One Research Paper

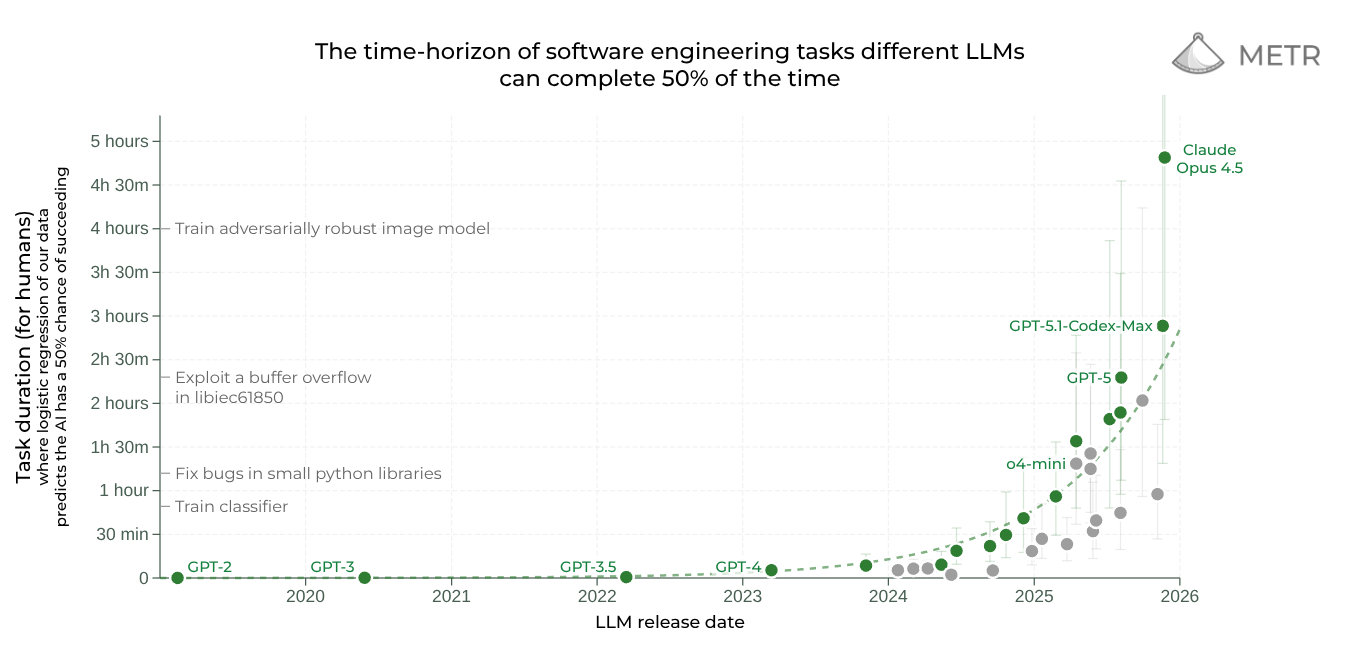

Then a research paper came out that really caught my attention. I think we were in Georgia when it happened. The researchers took a set of tasks where they knew how long each one takes a human software engineer to do. Then they checked, for each model, how long a task it can complete correctly at least 50% of the time. In other words, if we gave the model that task 1,000 times, it would get it right 500 times or more.

And they found that this curve is exponential.

Roughly every seven months, the length of task the best models can handle doubles.

We're in a cycle where, every seven months, we double how much time a single request to an LLM can save us.
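The doubling rule is just compound growth. Here is a minimal Python sketch, assuming the seven-month doubling keeps holding and starting from the 4.5-hour figure quoted for Opus 4.5 in this post. The function name `projected_horizon` is hypothetical, made up for illustration.

```python
def projected_horizon(current_hours: float, months_ahead: float,
                      doubling_months: float = 7.0) -> float:
    """Task length (in human hours) a model could handle at 50% success,
    assuming the length keeps doubling every `doubling_months` months."""
    return current_hours * 2 ** (months_ahead / doubling_months)


# Project forward from a 4.5-hour horizon in seven-month steps.
for months in (0, 7, 14, 21):
    print(months, "months:", projected_horizon(4.5, months), "hours")
```

Three doublings (21 months) take 4.5 hours to 36 hours. That's the point: the growth is exponential, not linear.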

Grocery

I saw this for myself when I went grocery shopping. Imagine you're in a foreign country, you don't know the language, and you see some milk. You want to know if it's oat milk or almond milk, and which one is better for you to buy.

In the past, I would just grab one at random or not buy anything at all, because I didn't want to open Google and search for the answer. It would take too much time, like 5, 7, 15 minutes, to really figure it out.

But now I just take a picture and send it to Gemini. I wait 20 or 30 seconds, and Gemini comes back with the answer. Perplexity, Gemini, ChatGPT, whatever.

That was the moment of realization: the LLM saved me 15 minutes of searching. I had the answer in 20 seconds instead of 15 minutes.

Our Tasks

Building a landing page used to take two months, right? Now it takes less than a day. See what I'm saying?

The models are going to keep improving and they're going to save us more and more time.

Think about this: if you had to write a PhD thesis, how much time would it take you now versus two or three years ago? There's no comparison. It's significantly less. It's gotten to the point where it's hard to even imagine how difficult writing a thesis used to be.

I can no longer imagine myself doing that kind of work without an AI assistant. Oh my god.

The same goes for writing a book, recording a podcast, publishing a blog post, all that kind of stuff.

I really like this way of looking at AI progress: not which model is the smartest, but how much time a model saves us.

Opus

Now, the latest model - and I just saw it, which is why it's on my mind - is from Anthropic, the company behind Claude. The model is called Opus 4.5, the best of the best of the best. A very deep, very large model. A thinking model.

Do you know how long a task it can complete with better than 50% likelihood, measured by how long that same task would take a human software engineer?

4.5 hours.

In one request, Opus can do 4.5 hours of software engineering work, and get it right at least half the time.
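To make the 50% threshold concrete, here is a minimal Python sketch of reading a time horizon off test results. Everything in it is an assumption for illustration: the function name `fifty_percent_horizon` and the success rates are hypothetical, and instead of the smooth curve a real study would fit, it simply interpolates between the two task lengths where the success rate drops below 50%. It interpolates on a log scale because task lengths span minutes to hours.

```python
import math


def fifty_percent_horizon(results):
    """Estimate the task length (in human minutes) at which success hits 50%.

    `results` is a list of (human_minutes, success_rate) pairs sorted by
    task length, with success falling as tasks get longer. Interpolates
    linearly in log2(task length) between the two points that straddle 50%.
    Returns None if the success rate never drops below 50%.
    """
    for (t0, p0), (t1, p1) in zip(results, results[1:]):
        if p0 >= 0.5 > p1:
            x0, x1 = math.log2(t0), math.log2(t1)
            frac = (p0 - 0.5) / (p0 - p1)  # how far between the points 50% falls
            return 2 ** (x0 + frac * (x1 - x0))
    return None


# Hypothetical success rates, made up for illustration only.
results = [(15, 0.95), (60, 0.80), (240, 0.55), (960, 0.20)]
horizon = fifty_percent_horizon(results)
print(f"50% horizon is about {horizon / 60:.1f} hours")
```

With these made-up numbers the model succeeds 55% of the time on 4-hour tasks and 20% on 16-hour tasks, so its 50% horizon lands a little above 4 hours.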

Our Usage

Now the question for you, and for me as well: how are we using these models?

Are we sending them tasks that would take us two hours? Are we using their full capabilities? Or are we just using them for 15-minute jobs?

Because this is changing so rapidly.

In seven months, if the trend holds, we will have a model capable of doing nine hours of work. You get that?

And it doesn't matter how long the model itself takes. What matters is that it's capable of doing the work, and that takes so much off our plate.

Even if the model needs two hours to finish a 4.5-hour task, that's okay, as long as we don't have to do it ourselves. Oh my God.

The Future

Thinking about the consequences and how it's going to look in the future is... wild.

Wild.

I don't know. I don't know how it's going to look.

It's wild.